OpenFaaS-as-a-Service: what to know before building on top of the framework

Launched in 2017, OpenFaaS became popular thanks to the simplicity with which developers can quickly start up a fully functional serverless system that is completely Function-as-a-Service oriented. In this article I do not want to show all the features that the framework offers; rather, I want to clarify which features and characteristics matter when you want to use it as-a-Service.

Suppose that we have to create a car-plate recognition application using OpenFaaS. The idea is to use the resources of the smart cameras deployed in the city. The city authorities send a blacklist of car plates to the smart cameras. Each smart camera analyzes its video stream in real time and, if it identifies one of these plates, transmits an event to the cloud infrastructure over a narrow-band communication channel such as NB-IoT.

The problem in this Fog deployment is that sometimes some cameras need to perform multiple plate recognitions, namely when there are multiple cars in their view, while at other times some cameras have an empty view and perform no video analysis at all. To make better use of the resources, we want to load balance the video analysis tasks among the smart cameras. How should you use OpenFaaS to achieve this result? You have to write your own custom FaaS scheduler.

Motivated by this need, in this article we will see how to build a new service that works on top of OpenFaaS and does not depend on its backend infrastructure. In this case we want to build a scheduler that implements cooperative load balancing, but the suggestions I give can be extended to any service that has to rely on OpenFaaS.

Before seeing how a service like this should be defined, let's have a look at how Docker Swarm and OpenFaaS work together.

OpenFaaS can support multiple backends; for the sake of simplicity I will focus only on Docker Swarm, since it is simpler to deploy.

The Docker Swarm realm

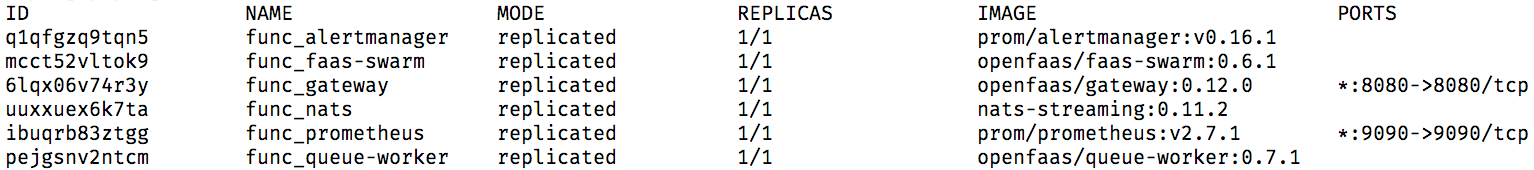

The OpenFaaS framework is made up of different components, and every component is deployed as a Docker Swarm service. You can think of a service as a data structure that includes a Docker image, environment variables, mounted volumes, open ports, and many other parameters. Some of the components included in OpenFaaS are the REST API service (func_gateway), the Prometheus service (func_prometheus), the queue service for asynchronous function calls (func_queue-worker) and the alert manager service (func_alertmanager).

The list of the core services that are deployed in the swarm by OpenFaaS (output of the command docker service ls).

In the Docker Swarm realm, a service can be global or replicated. A global service runs exactly one instance (actually called a task) on every node of the swarm, whereas a replicated service runs a configurable number of replicas distributed across the swarm. Docker Swarm only schedules tasks: it decides on which node of the swarm each one runs and also performs load balancing. This scheduler can be customized, and you may choose to do so, but you will agree with me that a more portable solution is to decouple your scheduler (or your generic service) from the underlying infrastructure. That way it will keep working even if OpenFaaS is deployed on Kubernetes, since you only refer to the framework and not to its backend.

Functions: principle of operation

When a function is deployed in the OpenFaaS framework, a new service is installed in the swarm at the same time, according to the following principle.

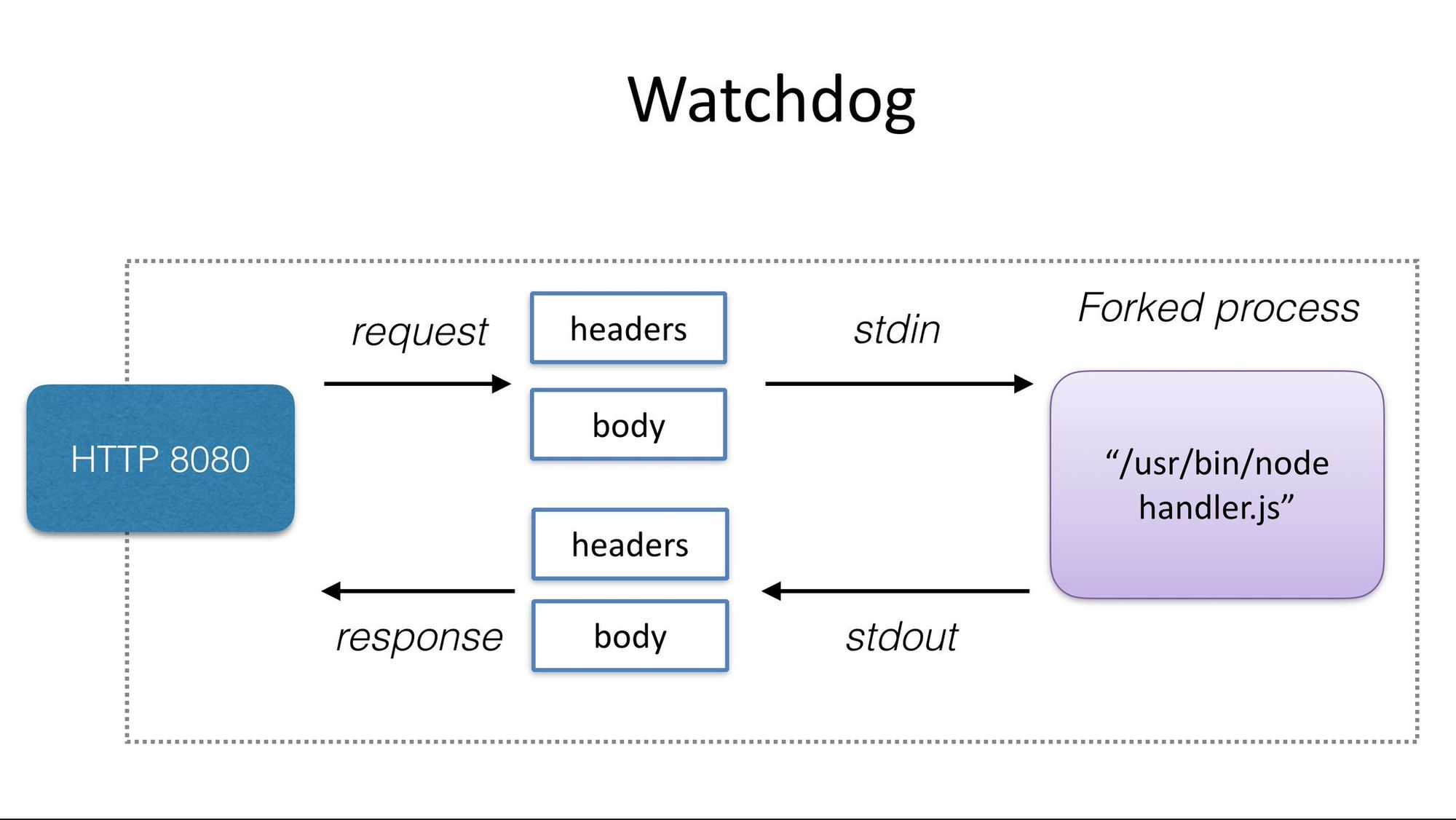

In a plain serverless model, every function invocation starts a new container, and the container is terminated when the function returns. Starting a container on each call adds latency to every invocation. To remove this delay, OpenFaaS injects a watchdog into the function's Docker image (which is in any case built from a pre-built template). The watchdog is a simple web server that translates HTTP GET or POST requests into function invocations and returns the function output as the HTTP response body. Since a web server runs continuously, listening on its port, the function's Docker container is never shut down.

Moreover, when the invocation rate of a function exceeds a certain threshold, the service is scaled up to improve throughput. As already mentioned, the Docker Swarm scheduler allocates the new replica of the function container to the least loaded node, if one exists. The key property of this process is that the function remains available at the same address and port even though it is replicated across the swarm; this is achieved transparently by the Docker Swarm internal DNS.

When the function watchdog receives an HTTP request, which is the actual call a client makes to execute a deployed function, it runs the function by forking a process, as normally happens when a process is started. In this way multiple invocations can run in parallel inside the same container. This behavior characterizes the classic watchdog originally introduced by OpenFaaS; it has been progressively replaced by a new watchdog, called of-watchdog, that also eliminates the forking delay by reusing processes and resources.

The OpenFaaS watchdog diagram, from the official website.
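To make the forking behavior concrete, here is a minimal Go sketch of a watchdog-like web server. It is not the OpenFaaS watchdog source code: the names runFunction and watchdog are hypothetical, cat stands in for a function binary (so a Unix-like system is assumed), and an in-process test server replaces the container's listening port. Each HTTP request forks one process, pipes the request body to its standard input and returns its standard output as the response body.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"os/exec"
	"strings"
)

// runFunction forks one process for a single invocation: the input is written
// to the process's stdin and everything it prints to stdout is returned.
func runFunction(command string, args []string, input []byte) ([]byte, error) {
	cmd := exec.Command(command, args...)
	cmd.Stdin = bytes.NewReader(input)
	return cmd.Output()
}

// watchdog returns a handler that translates every HTTP request into one
// execution of the given command (hypothetical helper, for illustration only).
func watchdog(command string, args ...string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		body, err := io.ReadAll(r.Body)
		if err != nil {
			http.Error(w, err.Error(), http.StatusBadRequest)
			return
		}
		out, err := runFunction(command, args, body)
		if err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
			return
		}
		w.Write(out)
	}
}

func main() {
	// The server keeps running between calls; only the function process is
	// created and destroyed per request. "cat" simply echoes its input.
	srv := httptest.NewServer(watchdog("cat"))
	defer srv.Close()

	resp, err := http.Post(srv.URL, "text/plain", strings.NewReader("hello"))
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()
	out, _ := io.ReadAll(resp.Body)
	fmt.Printf("function output: %s\n", out)
}
```

Because the handler is invoked concurrently by net/http, several requests arriving together simply result in several forked processes running side by side, which is the parallelism described above.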

So a function is a service and, like all Docker Swarm services, it can be scaled up with multiple replicas. As you may have noticed, however, this does not give you a fine-grained mechanism for scheduling functions: what if you want to implement your own scheduler, in which the scheduling decision is made per function invocation request? You should build your scheduler as a service that works on top of OpenFaaS and exploits its APIs, and we will see how to do this in the next section. Still, the OpenFaaS model, which makes HTTP function invocations something like process forks, is a winning strategy: it exploits the processor sharing paradigm without the additional overhead of stopping and restarting containers.

Deploying custom services

The key idea of using OpenFaaS as a FaaS provider is to install another service in the Docker Swarm that can receive requests from the outside, as OpenFaaS normally does, and that delegates their execution to the framework. This process can also be enriched with any custom scheduling algorithm or decision. The only overhead comes from the fact that our service needs to talk directly to OpenFaaS via its REST API, which is the subject of the next section.

Suppose that we want to build a custom scheduler called CustomScheduler that uses OpenFaaS for executing functions. We can summarize the key requirements of our service as follows:

- it should be deployed as a swarm service in the same swarm where OpenFaaS is installed;

- it should talk to OpenFaaS through its REST API;

- it should not introduce any intensive operation that could slow down function execution;

- the scheduling process should be transparent to users, except for the fact that their requests must be sent to a different port from the OpenFaaS one;

- the scheduling process should be done per function invocation: after a client calls a function, the scheduler decides where to execute it according to a custom policy, and this execution can also imply a call to the scheduler of another node.

My advice is to build the CustomScheduler with Go, a compiled language that offers a very good tradeoff between high performance and ease of use. I will not go deep into implementation details in this post, and we assume that the service is ready and packaged in a Docker image.

The following snippet shows the docker-compose.yml file, which declares services or stacks of services that can be deployed either with the docker-compose utility or, in the case of Docker Swarm, with the command docker stack deploy. Our service is called custom-scheduler, it listens on port 18080 and it uses the custom-scheduler:latest image.

    version: "3.3"

    services:
      custom-scheduler:
        image: custom-scheduler:latest
        environment:
          env: dev
        ports:
          - 18080:18080
        networks:
          - func_functions
        secrets:
          - basic-auth-user
          - basic-auth-password
        deploy:
          restart_policy:
            condition: any
            delay: 5s
          placement:
            constraints:
              - "node.role == manager"
              - "node.platform.os == linux"

    networks:
      func_functions:
        external: true

    secrets:
      basic-auth-user:
        external: true
      basic-auth-password:
        external: true

A docker-compose.yml file for a service that can access all the OpenFaaS features.

Two crucial elements must be defined in our service in order to allow it to interact with the existing OpenFaaS installation:

- the virtual network to which the container will be attached;

- the OpenFaaS secrets that are needed for authenticating against its REST API.

When a container is created, you can attach it to a virtual network by specifying the name of a Docker network in the networks field of the docker-compose.yml file. Since OpenFaaS has already been deployed and has created its own network, to let our service talk to it we need to specify func_functions, the name of the OpenFaaS network, and declare it as external (as you can see at the end of the file). In this way our service can reach the framework REST API at the base URL http://faas-swarm:8080.

Another key element needed for accessing the framework API is authentication. When OpenFaaS is deployed for the first time, it creates two Docker secrets: one that contains a username (admin by default) and one that contains a password (usually a random hash). A Docker secret is essentially a blob of data that is accessible only to the containers that are explicitly allowed to use it. Secrets are available as files in the directory /run/secrets. In the case of OpenFaaS, the two secrets are:

- basic-auth-user, which will be mounted at /run/secrets/basic-auth-user;

- basic-auth-password, which will be mounted at /run/secrets/basic-auth-password.

For this reason, in our docker-compose.yml file we declare the two secrets as external: in this way we grant our service access to the OpenFaaS credentials.

Interacting with OpenFaaS

Your CustomScheduler service can easily interact with the OpenFaaS framework by relying on its REST API. The main endpoints offered by the framework are:

- /system/functions to manage existing functions and deploy new ones;

- /system/function/{functionName} to get information about the functionName function;

- /function/{functionName} to execute the functionName function (you can use both POST and GET methods, but the former is obviously preferred when you need to specify function parameters);

- /system/function/scale-function/{functionName} to trigger the scaling of the functionName function (in OpenFaaS scaling is automatic, as already discussed, but it can be forced with a GET to this API).
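As an example of using the invocation endpoint from the list above, the following Go sketch sends a POST to /function/{functionName} with basic authentication. The helpers functionURL and invoke, the plate-recognition function name and the credentials are hypothetical, and an in-process test server stands in for OpenFaaS so that the example runs outside a swarm.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/url"
	"strings"
)

// functionURL builds the invocation endpoint /function/{functionName}.
func functionURL(baseURL, name string) string {
	return strings.TrimRight(baseURL, "/") + "/function/" + url.PathEscape(name)
}

// invoke executes a deployed function with a POST and returns its output.
func invoke(client *http.Client, baseURL, name, user, password string, payload []byte) ([]byte, error) {
	req, err := http.NewRequest(http.MethodPost, functionURL(baseURL, name), bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	// Standard-library helper that sets the "Authorization: Basic ..." header.
	req.SetBasicAuth(user, password)

	resp, err := client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	out, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	if resp.StatusCode < 200 || resp.StatusCode >= 300 {
		return nil, fmt.Errorf("function %q returned %s: %s", name, resp.Status, out)
	}
	return out, nil
}

func main() {
	// Stand-in for OpenFaaS: rejects calls without credentials, echoes otherwise.
	fake := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if _, _, ok := r.BasicAuth(); !ok {
			http.Error(w, "unauthorized", http.StatusUnauthorized)
			return
		}
		io.Copy(w, r.Body)
	}))
	defer fake.Close()

	out, err := invoke(fake.Client(), fake.URL, "plate-recognition", "admin", "secret", []byte("frame-0001"))
	if err != nil {
		fmt.Println("invocation failed:", err)
		return
	}
	fmt.Printf("%s\n", out)
}
```

In a real deployment the base URL would be the one reachable on the func_functions network, and the credentials would come from the mounted secrets.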

To use these APIs, your scheduler needs to add the following field to the HTTP header of every request:

Authorization: Basic <hash>

Here <hash> is the Base64 encoding of the string <username>:<password>, and both the username and the password can be found in the /run/secrets folder, as already discussed. If you use Go and the standard net/http package, this can be easily achieved with a function like this:

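    // SetAuthHeader adds the HTTP Basic authentication header, built from the
    // OpenFaaS credentials held in config, to the given request.
    func SetAuthHeader(req *http.Request) {
        auth := config.OpenFaaSUsername + ":" + config.OpenFaaSPassword
        req.Header.Set("Authorization", "Basic "+base64.StdEncoding.EncodeToString([]byte(auth)))
    }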

Conclusions

Thanks to the flexibility of OpenFaaS and its backends, such as Docker Swarm or Kubernetes, it is really easy to use this framework as-a-Service. As we have seen, the key idea is essentially to keep using the same approach that OpenFaaS itself uses: create a service, make it talk to the framework over REST, and OpenFaaS will do the rest, giving you a stable and efficient implementation of the FaaS paradigm.

I hope this post makes it clearer, for anyone who wants to build a custom scheduler or a custom stack of services that needs a FaaS implementation, how to exploit the functionality that the OpenFaaS framework offers and how to build a solution that is as independent as possible from the infrastructure where the framework is installed.